Blogs

Stories, news, and announcements from the uCertify Team

In the spotlight

New & Popular Post

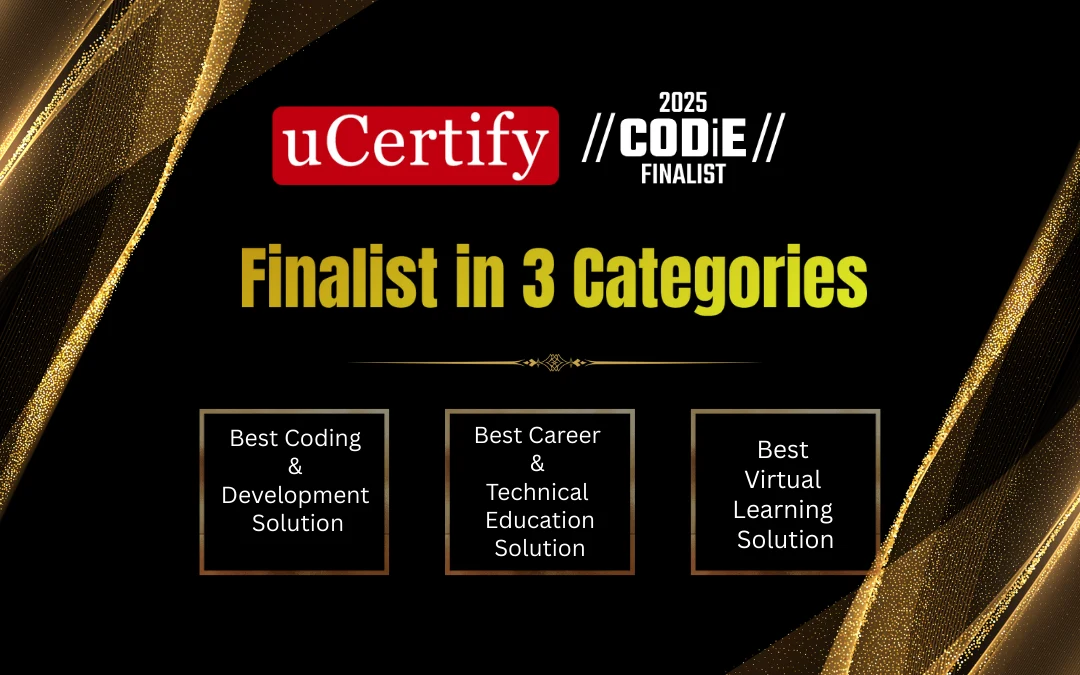

uCertify Named Finalist in 3 Categories at Prestigious 2025 SIIA CODiE Awards

Have you heard? We are thrilled to announce that uCertify has been named a finalist in three categories of the renowned 2025 SIIA CODiE Awards, for the 12th year in a row. This achievement showcases our unwavering commitment to delivering excellence and innovation in educational technology. The SIIA CODiE Awards are a prestigious and demanding competition that honors the best products and services in education and business technology.

Best Categories in SIIA CODiE Awards

We are thrilled that our platform and solutions were picked as finalists in the following categories:

Best Coding & Development Solution

Best Career & Technical Education (CTE) Solution

Best Virtual Learning Solution

The CODiE Awards set the industry standard for excellence in educational technology. We feel extremely honored to be recognized in these crucial areas, which demonstrates our dedication to transformative learning experiences. We eagerly await the...

Industry Trends

Why Hands-On Labs Matter More Than Videos: A Deep Dive into uCertify’s CloudLAB & LiveLAB

Why Data Analysis is the Career of the Future?

What Is Machine Learning? Explained for Absolute Beginners

Unlock Your Software Quality Potential with uCertify!

Unlock Your Database Potential with MySQL!

Unlock the Secrets of Digital Forensics with uCertify!

Unlock the Benefits of Inclusion with uCertify!

Unleash Your Coding Potential with uCertify’s C# 10.0 All-in-One Course

Understanding the Difference-Data Analytics and Data Science

Understanding Cybercrime and Cybersecurity: A Wake-Up Call for the Digital Age

uCertify’s Halloween Day Sale is Live Now!

uCertify’s Columbus Day Sale is Live Now!

uCertify New Year Sale is Live Now!

uCertify Independence Day Sale. Avail 30% Off. Rush now

uCertify Independence Day Sale. Avail 25% off. Rush now

uCertify in Association with McGraw-Hill Education

uCertify Black Friday Blowout: Massive Discounts on ALL Courses!

uCertify Back to School Sale: 30% off. Rush now!

The Ultimate Guide to Multi-Cloud Strategies

Steal The Deal: Save 30% at MLK day SALE

IT & Software

7 Best IT Certifications to Get Hired in 2025

Guide to Best CompTIA Certifications: Your Path to IT Success

uCertify Black Friday Blowout: Massive Discounts on ALL Courses!

iOS Development in 2024: A Complete Guide for Beginners

Editor's Choice

10 Best Cybersecurity Certifications for High-Paying Jobs

The need for upskilling and acquiring top cybersecurity certifications is no longer invisible. Every second, a digital battle rages where...

AI in Cybersecurity: 5 Must-Have Skills to Stay Ahead

AI in Cybersecurity is no longer a futuristic concept—IT’S HERE! And it’s transforming how cybersecurity operates across the board. From...

uCertify Successfully Achieved ISO/IEC 27001:2022 Certification

LIVERMORE, California, May 01, 2025 — uCertify Training & Learning Pvt Ltd has achieved ISO/IEC 27001:2022 Certification, the globally recognized...

7 Best IT Certifications to Get Hired in 2025

Cracking a good job offer can feel like a daunting task. The journey to the top might seem far to...

Guide to Best CompTIA Certifications: Your Path to IT Success

CompTIA was created in 1982 as the Association of Better Computer Dealers (ABCD), a trade group originally focused on representing...

xAI Announces Grok-Powered Chatbot for E-Commerce Websites

xAI launches a Grok-powered chatbot for e-commerce on March 19, 2025, increasing sales by 25% and reducing support costs by...

Cybersecurity Startup Launches AI-Driven Web Protection Suite

WebGuard AI, launched on March 18, 2025, protects websites with AI, blocking 98% of threats and reducing breaches by 80%.

Tech Firm Releases Web Development Framework with AI Integration

SmartWeb 2025, a new AI-integrated web development framework, launches on March 17, 2025, reducing development time by 40% and boosting...

New AI Tool Enhances Web Accessibility for Developers

AccessAI, launched on March 16, 2025, helps developers enhance web accessibility, improving compliance by 80% and user engagement by 40%.

uCertify Partners with xAI to Integrate Grok into Web Development Courses

uCertify partners with xAI on March 15, 2025, to integrate Grok into web development courses, improving learning outcomes by 40%...

Cloud Computing Giant Expands AI-Driven Services

Cloud computing giant launches CloudAI Suite on March 14, 2025, offering AI-driven tools that reduce training time by 50% and...

Blockchain Startup Launches Supply Chain Solution

ChainTrack, a blockchain supply chain solution, launches on March 13, 2025, reducing fraud by 60% and tracking time to 1...

Office Productivity

uCertify New Year Sale is Live Now!

uCertify Black Friday Blowout: Massive Discounts on ALL Courses!

Why Data Analysis is the Career of the Future?

The Ultimate Guide to Multi-Cloud Strategies

Getting Started with Data Visualization: The Fundamentals

Development

Computer Terminologies Decoded: The Ultimate Beginner’s Roadmap

The Importance of Business Terminologies in Effective Communication

Understanding Server Components vs. Client Components in Modern React Applications

Getting Started with SQL: From Basic Queries to Data Insights in 2025

Progressive Web Apps: Building the Next Generation of Web Applications

Breaking Down IT Myths Through Tech+ Learning

Read moreBoss-Level Training for Your Team

With uCertify Business, you get courses and tools that sharpen skills and increase productivity.

Next in AI & Big Data

Is Artificial Intelligence Hard to Learn? Honest Answer for Beginners

How Businesses Are Using Artificial Intelligence to Increase Revenue

Machine Learning for Finance: Skills You Need in 2026

No-Code AI vs Traditional AI Development: Which One Is Right for You?

What Is Machine Learning? Explained for Absolute Beginners

AI with Python vs Machine Learning: What Should Beginners Learn First?

DoS Attacks and Infrastructural Vulnerabilities: What You Need to Know

Why Hands-On Labs Matter More Than Videos: A Deep Dive into uCertify’s CloudLAB & LiveLAB

Power BI vs. Tableau: Which Data Visualization Tool is Right for You?

Professmo AI Tutor: The Future of Smarter, Customized Learning

Press Release

uCertify Launches New Hands-On Certification Programs For In-Demand Careers

uCertify Showcases its Interactive ICT Learning Solutions at SkillsUSA NLSC 2025

uCertify Successfully Achieved ISO/IEC 27001:2022 Certification

xAI Announces Grok-Powered Chatbot for E-Commerce Websites

Cybersecurity Startup Launches AI-Driven Web Protection Suite

Tech Firm Releases Web Development Framework with AI Integration

New AI Tool Enhances Web Accessibility for Developers

uCertify Partners with xAI to Integrate Grok into Web Development Courses

Cloud Computing Giant Expands AI-Driven Services

Blockchain Startup Launches Supply Chain Solution

New Cybersecurity Platform Blocks 99% of Threats

Tech Firm Announces 5G-Powered IoT Solution

Tech Giant Announces Breakthrough in Quantum Encryption

uCertify Unveils Next-Gen AI Tool for Developers
